Originally published on Medium on October 2, 2018.

Not all websites are hosted on the cloud, where heavy server load is easier to manage. Some of us still run PHP-based frameworks and CMSs like Drupal/WordPress on a VPS. And sometimes we get requests to expose our APIs to power our shiny new mobile apps (this has happened). When that happens, our servers crash and API response times climb above 10 seconds. That’s when our bosses come thundering down on our unlucky selves to fix the ‘slow app’.

At times like these, we’re sort of forced to wear our ‘technical’ caps. Product managers usually rely on technical teams for these things, but sometimes the team needs a nudge in the right direction. What follows is that nudge.

Gathering data

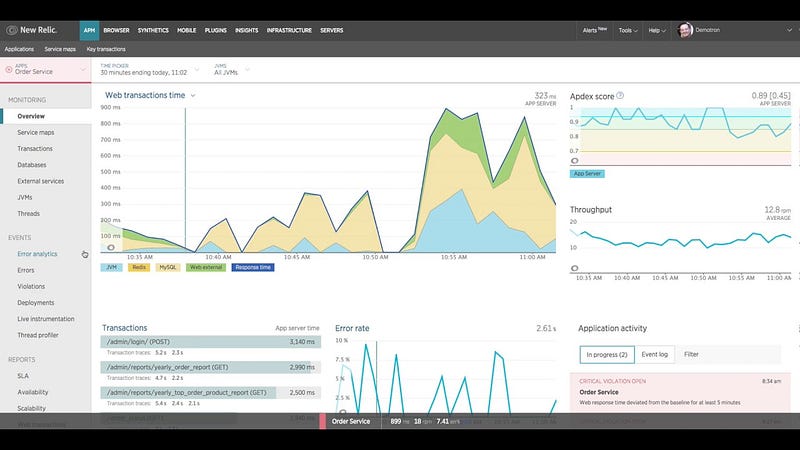

Before you begin anything, install New Relic. Its free version gives you enough insight into your API timings, and the installation is pretty simple.

Through this, you should be able to get the following:

- Most frequent requests/transactions

- Most time-consuming requests/transactions

- Most error-prone requests/transactions

…with their relevant timing values.
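If you want to rank these yourself from exported numbers, the three lists boil down to sorting by call count, total time and error count. The sketch below assumes per-transaction rows with hypothetical fields `name`, `calls`, `avgMs` and `errors`; the rows are made-up sample values, not real New Relic output or its export format.

```javascript
// Made-up sample rows for illustration; the field names (name, calls, avgMs,
// errors) are assumptions, not New Relic's actual export format.
const transactions = [
  { name: "/api/getConfig", calls: 52000, avgMs: 2100, errors: 12 },
  { name: "/api/getMemberDetails", calls: 18000, avgMs: 1900, errors: 40 },
  { name: "/api/sendSms", calls: 9000, avgMs: 3900, errors: 310 },
  { name: "/api/checkMemberLoginStatus", calls: 30000, avgMs: 650, errors: 5 },
];

// Top `n` rows by a numeric field, highest first; copies so the input isn't mutated.
function topBy(rows, field, n = 3) {
  return [...rows].sort((a, b) => b[field] - a[field]).slice(0, n);
}

// Total time (calls x average time) is what actually ties up the server.
const withTotals = transactions.map((t) => ({ ...t, totalMs: t.calls * t.avgMs }));

const mostRequested = topBy(transactions, "calls");
const mostTimeConsuming = topBy(withTotals, "totalMs");
const mostErring = topBy(transactions, "errors");

console.log("Most requested:", mostRequested.map((t) => t.name));
console.log("Most time-consuming:", mostTimeConsuming.map((t) => t.name));
console.log("Most erring:", mostErring.map((t) => t.name));
```

Ranking by total time rather than average time is a deliberate choice here: it surfaces the calls that consume the most server capacity overall, which is exactly the load this article sets out to move.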

Redraw your architecture

Your current architecture is probably simple: a single server that handles the majority of the load, with a database that has (hopefully) been externalized.

Server load types

Let’s break your server load down into the following types:

- Static API calls: calls whose response doesn’t change within a quantum of time. The quantum could be 15/30/60 minutes. These are usually configuration calls. E.g., the mobile app’s configuration call requires a database request to fetch the latest values, yet the app configuration rarely changes over time.

- Dynamic API calls: calls that are user-specific (like getMemberDetails) but whose response doesn’t change for a quantum of time.

- Realtime API calls: calls that need to check the database every time to guarantee realtime data, such as checkMemberLoginStatus or getApiStatus.

- Outbound API calls: calls that make another call to update some value or trigger some communication. E.g., the API call to send an SMS/email falls under this category. They’re usually fire-and-forget calls.

Categorize your API calls using the types above and assign each a Quality of Service (QoS) value, i.e., the maximum number of milliseconds within which a response still counts as good service. Your breakdown could be:

- Less than 700ms: Realtime API calls

- Less than 1500ms: Dynamic API calls

- Less than 2500ms: Static API calls

- Less than 4000ms: Outbound API calls

Compare these targets with your New Relic data to see your current timings and how far they are from the targets.
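The budgets can live in a small lookup table so every measured timing is checked the same way. A minimal sketch: the category keys and the sample measurements are hypothetical, while the millisecond budgets are the ones from the breakdown.

```javascript
// QoS budgets in milliseconds, from the breakdown above.
const QOS_BUDGET_MS = { realtime: 700, dynamic: 1500, static: 2500, outbound: 4000 };

// Compare a measured timing with its category's budget. A positive gapMs
// means the call is that many milliseconds over budget.
function checkQoS(category, measuredMs) {
  if (!Object.prototype.hasOwnProperty.call(QOS_BUDGET_MS, category)) {
    throw new Error(`Unknown API category: ${category}`);
  }
  const budget = QOS_BUDGET_MS[category];
  return { budget, gapMs: measuredMs - budget, ok: measuredMs < budget };
}

// Hypothetical average timings, e.g. read off your APM dashboard.
const measured = [
  { api: "getConfig", category: "static", avgMs: 2100 },
  { api: "getMemberDetails", category: "dynamic", avgMs: 1900 },
  { api: "checkMemberLoginStatus", category: "realtime", avgMs: 650 },
  { api: "sendSms", category: "outbound", avgMs: 3900 },
];

for (const m of measured) {
  const { budget, gapMs, ok } = checkQoS(m.category, m.avgMs);
  const verdict = ok ? "OK" : `over by ${gapMs}ms`;
  console.log(`${m.api} (${m.category}): ${m.avgMs}ms vs ${budget}ms budget, ${verdict}`);
}
```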

You’ll notice that static and outbound API calls are huge in number and take up much of the processing capacity that should be devoted to realtime and dynamic calls. This is the problem we’re going to solve.

Enter Node.js + MongoDB

Now that we understand our calls, we should focus on eliminating the bottleneck created by the static API calls. To do that, set up an nginx server on ports 80/443. Nginx is a lightweight reverse proxy that can be used as a router. Here is what you need to do:

- Install nginx and move Apache to port 8080.

- Move the APIs to category-specific URIs: if your calls were /api/<apiName>, change the static ones to /api/static/<apiName>, the dynamic ones to /api/dynamic/<apiName>, the outbound ones to /api/outbound/<apiName> and the realtime ones to /api/realtime/<apiName>.

- Install Node.js and run it on port 8081; install MongoDB on its default port (27017).

- In the nginx config file, route all traffic from /api/static/*, /api/outbound/* and /api/dynamic/* to Node.js on port 8081. Route realtime traffic to Apache on port 8080.

- Install RabbitMQ and set up a queue for the outbound calls.
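The nginx routing rule is easiest to reason about as a prefix table. The sketch below expresses it in JavaScript to show which upstream each path would reach; it illustrates the routing decision and is not a replacement for the nginx config. It assumes Apache and Node.js run on the same host (127.0.0.1) and that everything outside the four API prefixes stays on Apache. The comments show the shape of the matching nginx `location` blocks.

```javascript
// Upstreams from the steps above: Apache moved to 8080, Node.js on 8081.
// Assumption: both run on the same machine as nginx.
const APACHE = "http://127.0.0.1:8080";
const NODE = "http://127.0.0.1:8081";

// Each rule mirrors an nginx prefix location, e.g.
//   location /api/static/   { proxy_pass http://127.0.0.1:8081; }
//   location /api/realtime/ { proxy_pass http://127.0.0.1:8080; }
const ROUTES = [
  { prefix: "/api/static/", upstream: NODE },
  { prefix: "/api/dynamic/", upstream: NODE },
  { prefix: "/api/outbound/", upstream: NODE },
  { prefix: "/api/realtime/", upstream: APACHE },
];

// nginx picks the longest matching prefix location; because these prefixes
// don't overlap, the first match here is also the longest one.
function upstreamFor(path) {
  const rule = ROUTES.find((r) => path.startsWith(r.prefix));
  // Assumption: anything else (the website itself) stays on Apache.
  return rule ? rule.upstream : APACHE;
}

for (const p of ["/api/static/getConfig", "/api/realtime/getApiStatus", "/index.php"]) {
  console.log(p, "->", upstreamFor(p));
}
```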

In the Node.js app, you’ll need to create four files:

- router.js: routes each incoming request to the right handler.

- requestHandler.js: handles all the static/dynamic calls.

- mq.js: passes the outbound requests to the message queue (RabbitMQ).

- outbound.js: handles the outbound request calls.
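A minimal sketch of what router.js could look like, using only Node’s built-in `http` module. It assumes the `/api/<category>/<apiName>` scheme from the setup steps and that apiName is made of letters, digits, `_` or `-`; `dispatch` is a hypothetical callback that hands the request to requestHandler.js or mq.js. Realtime paths get a 404 here because nginx should never send them to Node.

```javascript
const http = require("http");

// Parse "/api/<category>/<apiName>" (query string ignored). Assumes apiName
// uses only letters, digits, "_" or "-". Returns null for anything else.
function parseApiPath(url) {
  const { pathname } = new URL(url, "http://localhost");
  const match = /^\/api\/(static|dynamic|outbound)\/([A-Za-z0-9_-]+)\/?$/.exec(pathname);
  return match ? { category: match[1], apiName: match[2] } : null;
}

// Static and dynamic calls go to the caching request handler,
// outbound calls go to the message-queue producer.
function route(url) {
  const parsed = parseApiPath(url);
  if (!parsed) return { status: 404, target: null };
  const target = parsed.category === "outbound" ? "mq" : "requestHandler";
  return { status: 200, target, ...parsed };
}

function sendJson(res, status, body) {
  res.writeHead(status, { "Content-Type": "application/json" });
  res.end(JSON.stringify(body));
}

// `dispatch(decision, req)` is a hypothetical callback that calls into
// requestHandler.js or mq.js and resolves to { status, body }.
function createRouterServer(dispatch) {
  return http.createServer((req, res) => {
    const decision = route(req.url);
    if (decision.status !== 200) {
      sendJson(res, 404, { error: "Unknown API" });
      return;
    }
    Promise.resolve()
      .then(() => dispatch(decision, req))
      .then(({ status, body }) => sendJson(res, status, body))
      .catch((err) => sendJson(res, 502, { error: String(err) }));
  });
}

// Usage (not run here): createRouterServer(dispatch).listen(8081);
```

Keeping `route` a pure function of the URL makes the routing table testable without starting a server.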

In requestHandler.js, set up the following logic:

- Open a MongoDB connection.

- Create a collection for each API request type (apiName).

- The key is the request object. The response is the value.

- Set an expiry on each cached request based on the request type. Safe values would be 15 minutes for static calls and 100 seconds for dynamic calls.

- You can set a different expiry time for each API, depending on your business needs.

- For each request, first check the database to see whether the request exists and hasn’t expired. (MongoDB has an auto-expiry option, TTL indexes; enable it.)

- If the request is missing or has expired, make the call internally to get the value, store it with a fresh expiry and return it (log the value if you’d like).

- Otherwise, send the cached response body in the response.
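The requestHandler logic can be sketched as a read-through cache. To keep it runnable without a database, a `Map` stands in for MongoDB; `fetchFromOrigin`, the `getAppConfig` override and the request shape (`apiName`, `category`, `userId`, `params`) are hypothetical. In MongoDB itself, per-document expiry is usually done with a TTL index on a date field created with `expireAfterSeconds: 0`, so each document is removed once its own expiry time has passed.

```javascript
// Default expiries from the values above; per-API overrides are optional.
const DEFAULT_TTL_MS = { static: 15 * 60 * 1000, dynamic: 100 * 1000 };
// Hypothetical override: say the app config only needs refreshing hourly.
const TTL_OVERRIDES_MS = { getAppConfig: 60 * 60 * 1000 };
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Stable key from whatever affects the response. Dynamic calls are
// user-specific, so the user ID is part of their key.
function cacheKey({ apiName, category, userId, params = {} }) {
  const sortedParams = Object.keys(params).sort().map((k) => [k, params[k]]);
  return JSON.stringify([apiName, category === "dynamic" ? userId : null, sortedParams]);
}

function ttlFor({ apiName, category }) {
  if (has(TTL_OVERRIDES_MS, apiName)) return TTL_OVERRIDES_MS[apiName];
  if (has(DEFAULT_TTL_MS, category)) return DEFAULT_TTL_MS[category];
  throw new Error(`No cache expiry configured for ${category}/${apiName}`);
}

// `fetchFromOrigin` is a hypothetical async function that makes the internal
// call to the existing PHP API on Apache; `now` is injectable for testing.
function createRequestHandler(fetchFromOrigin, now = () => Date.now()) {
  const store = new Map(); // in-memory stand-in for the MongoDB collections

  return async function handle(request) {
    const ttl = ttlFor(request); // fail fast for uncached types (e.g. realtime)
    const key = cacheKey(request);
    const cached = store.get(key);
    // Check expiry in code as well: MongoDB's TTL monitor deletes expired
    // documents only periodically, so a stale one can briefly still be read.
    if (cached && cached.expiresAt > now()) {
      return { body: cached.body, fromCache: true };
    }
    // Missing or expired: fetch a fresh response and cache it with a new expiry.
    const body = await fetchFromOrigin(request);
    store.set(key, { body, expiresAt: now() + ttl });
    return { body, fromCache: false };
  };
}
```

Putting the user ID only into dynamic keys is what keeps one member’s cached getMemberDetails response from being served to another member, while static responses are shared by everyone.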

In mq.js:

- Pass the request object to the MQ.

- Send a success response.
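A sketch of mq.js’s two steps. RabbitMQ needs a running broker, so an in-memory array stands in for the queue here, and the message shape is a hypothetical choice. With the amqplib client, the push would be `channel.sendToQueue(queue, Buffer.from(JSON.stringify(message)), { persistent: true })` on a queue declared with `channel.assertQueue(queue, { durable: true })`.

```javascript
// In-memory stand-in for the RabbitMQ queue of outbound calls.
const outboundQueue = [];

// Serialize only what the outbound worker needs (hypothetical message shape).
function enqueueOutbound(request) {
  const message = {
    apiName: request.apiName,
    params: request.params || {},
    enqueuedAt: Date.now(),
    attempts: 0,
  };
  // With amqplib this would be channel.sendToQueue(...) instead of push().
  outboundQueue.push(JSON.stringify(message));
  // Answer right away: the caller doesn't wait for the SMS/email to go out.
  return { status: 202, body: { queued: true } };
}
```

This sketch answers with HTTP 202 (Accepted) as its “success”, since the work hasn’t happened yet; a plain 200 would serve the mobile app just as well.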

In outbound.js:

- Handle the request.

- Wait for the response.

- If the response is a failure, send the item to the back of the queue.

- Otherwise, close the connection.
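The outbound.js loop can be sketched as below, again with an array of JSON strings standing in for RabbitMQ and a hypothetical async `send` that makes the real SMS/email call. One RabbitMQ detail worth knowing if you port this: a message rejected with requeue (amqplib’s `channel.nack(msg, false, true)`) goes back to its original position where possible, not to the back, so “back of the queue” usually means re-publishing the message with `sendToQueue` and then acking the original with `channel.ack(msg)`.

```javascript
// Process up to `limit` messages from the front of `queue` (an array of JSON
// strings standing in for RabbitMQ). `send` is a hypothetical async function
// that makes the real outbound call and resolves to true on success.
async function processOutbound(queue, send, limit = queue.length) {
  let sent = 0;
  let requeued = 0;
  for (let i = 0; i < limit && queue.length > 0; i++) {
    const message = JSON.parse(queue.shift());
    let ok = false;
    try {
      ok = (await send(message)) === true;
    } catch (err) {
      // A thrown error (timeout, connection refused...) counts as a failure.
      console.error(`Outbound call failed: ${err.message}`);
    }
    if (ok) {
      sent++; // finished with this message (in RabbitMQ terms: ack it)
    } else {
      // Failure: put it at the back so one bad message doesn't block the rest.
      message.attempts = (message.attempts || 0) + 1;
      queue.push(JSON.stringify(message));
      requeued++;
    }
  }
  return { sent, requeued };
}
```

By default `limit` is the queue length at the start of the call, so one call makes a single pass; requeued messages wait for the next pass instead of spinning in a tight retry loop while a service is down.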

Usually, at this point, your technical team will intervene and raise the problem of overloaded queues when the service on the other side is down. That can be handled either with a variable stored in MongoDB that tracks the service’s status, or by simply letting the queue be. The queue can get big (we’ve seen 10,000 requests backlogged while an SMS service was down, with only 50MB used by the queue service and no downtime!), yet there is no visible downtime on the front end.
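The first option, a service-status variable, might look like the sketch below. A `Map` stands in for a small MongoDB collection of hypothetical `{ service, up, checkedAt }` documents, and `send` is again a hypothetical async outbound call. The worker only drains the queue while the service is marked up, and flips the flag on the first failure so messages stay queued instead of being retried against a dead service; something else (a hypothetical health check) would call `markService(service, true)` once it recovers.

```javascript
// Stand-in for a MongoDB collection of service statuses
// (hypothetical documents like { service: "sms", up: true, checkedAt }).
const serviceStatus = new Map([["sms", { up: true, checkedAt: Date.now() }]]);

function markService(service, up) {
  serviceStatus.set(service, { up, checkedAt: Date.now() });
}

// Drain `queue` (array of JSON strings) only while `service` is marked up.
async function drainIfUp(service, queue, send) {
  const status = serviceStatus.get(service);
  if (!status || !status.up) {
    return { skipped: true, sent: 0, backlog: queue.length };
  }
  let sent = 0;
  while (queue.length > 0) {
    const message = JSON.parse(queue[0]); // peek; remove only after success
    let ok = false;
    try {
      ok = (await send(message)) === true;
    } catch (err) {
      console.error(`${service} call failed: ${err.message}`);
    }
    if (!ok) {
      // Flag the service as down so no worker hammers it; the message stays queued.
      markService(service, false);
      break;
    }
    queue.shift();
    sent++;
  }
  return { skipped: false, sent, backlog: queue.length };
}
```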

Conclusion

As I wrote before, this is a nudge. You’ve given the team something to think about, and to be fair, this is as far as you’ll go, and should go. The ability to think laterally and cross-functionally is essential to a product manager’s growth.

In summary, by using New Relic to understand where the problem is, you’ve broken the problem of overloaded servers down into smaller, easier-to-handle problems, one per request type. That is a key lesson when you’re out solving problems every day: the biggest problems are made up of smaller ones, so understand those and solve the smallest first. Solve the small things, and the big things will probably disappear!

How is your load being distributed? Have you tried the method above? Are you experiencing similar issues? I’d love to hear about it — drop me a line. Thank you for reading!