Originally published on The Startup on Oct 29, 2019

We’ve all read about the million-transactions-per-second systems at Google or LinkedIn, and about the scale of Netflix and its success with a microservices architecture (MSA). More often than not, we’re tempted to build the same level of complexity into our own systems without understanding what that complexity involves. But do we really need such a complicated architecture?

As Joe Hellerstein put it in a side rant to his undergraduate databases class (54 min in):

The thing is there’s like 5 companies in the world that run jobs that big. For everybody else… you’re doing all this I/O for fault tolerance that you didn’t really need. People got kinda Google mania in the 2000s: “we’ll do everything the way Google does because we also run the world’s largest internet data service” [tilts head sideways and waits for laughter]

However, MSA is shiny, and the boardroom loves ‘shiny’. It gives them some fodder to discuss with their counterparts, a way to sound smart and important. ‘We’ve implemented a microservices architecture. Our products can now handle over a million transactions a second!’, they say. Everybody claps.

Except for the developers who’re dreading the cavalcade of code maintenance complexity and testing issues. Not to mention the costs involved.

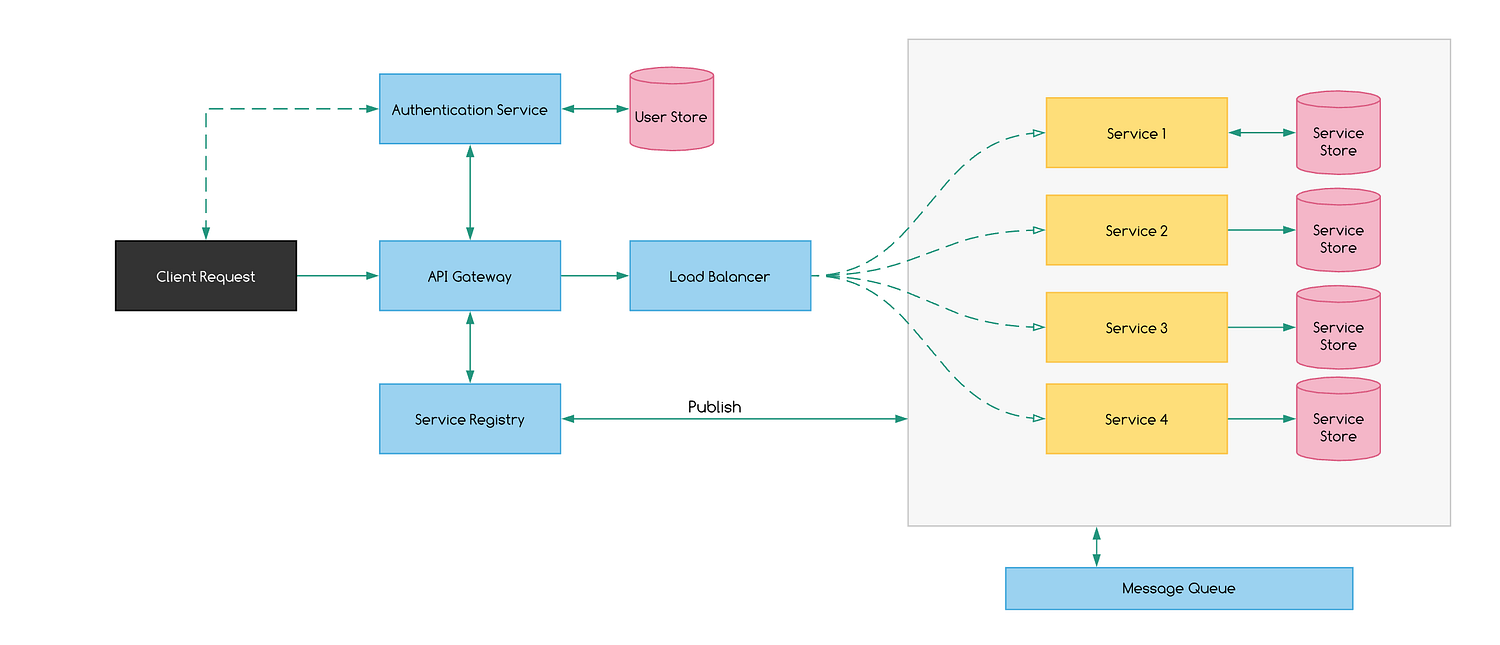

In a typical MSA, almost all calls are REST API calls. Each service is maintained separately (by a small set of developers) and is independent of the functionality of the other services. Inter-service communication is handled through gRPC, REST or GraphQL, depending on the use case, and each service gets its own store (a NoSQL database).
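To make the "one store per service" idea concrete, here is a minimal sketch simulated in a single process. It assumes a `Map` stands in for each service's NoSQL database and that `callService` is a hypothetical stand-in for a REST/gRPC/GraphQL call; the service names and data are illustrative only.

```javascript
// Sketch (assumption): the "each service owns its store" pattern of a full MSA,
// simulated in one process. A Map stands in for each service's NoSQL database and
// callService is a hypothetical stand-in for a REST/gRPC/GraphQL call.

function createCatalogService() {
  const store = new Map(); // private to the catalog service
  store.set("sku-1", { sku: "sku-1", price: 25 });
  return {
    getProduct(sku) {
      return store.get(sku) ?? null;
    },
  };
}

function createOrderService(callService) {
  const store = new Map(); // private to the order service
  return {
    placeOrder(orderId, sku, qty) {
      // The order service never reads the catalog's store directly;
      // it has to ask the catalog service for the data.
      const product = callService("catalog", "getProduct", sku);
      if (!product) throw new Error(`Unknown SKU: ${sku}`);
      const order = { orderId, sku, qty, total: product.price * qty };
      store.set(orderId, order);
      return order;
    },
  };
}

const services = { catalog: createCatalogService() };
const callService = (service, method, ...args) => services[service][method](...args);
services.order = createOrderService(callService);
```

In a real MSA each of these would be a separately deployed process with its own database and a network hop in between; that operational overhead is what the rest of this article weighs against typical retail loads.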

However, the majority of retailers in the region do not really need a full-fledged MSA solution; the cost doesn’t justify the benefit. Typical loads are 100/1000 requests per second, with a required average response time of 1 to 1.2 s. Paying to maintain these services on separate clusters with separate stores is not commercially viable.

Inflatable Microservices Architecture

Enter the Inflatable MSA: a pseudo service-oriented (or microservices) architecture that respects the principles of MSA and allows for a seamless transition to a full-blown MSA.

Inflatable MSA is meant for small loads (~10k requests per day) in organizations that expect to eventually grow into a level of complexity that justifies MSA.
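For a sense of scale, ~10k requests per day spread evenly works out to well under one request per second (roughly one every 8.6 seconds). Real traffic is burstier, so peaks will sit above this average:

```latex
\frac{10\,000\ \text{requests/day}}{86\,400\ \text{s/day}} \approx 0.12\ \text{requests/s}
```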

Here are the principles:

Principle 1: Modular Code — Monolithic deployment

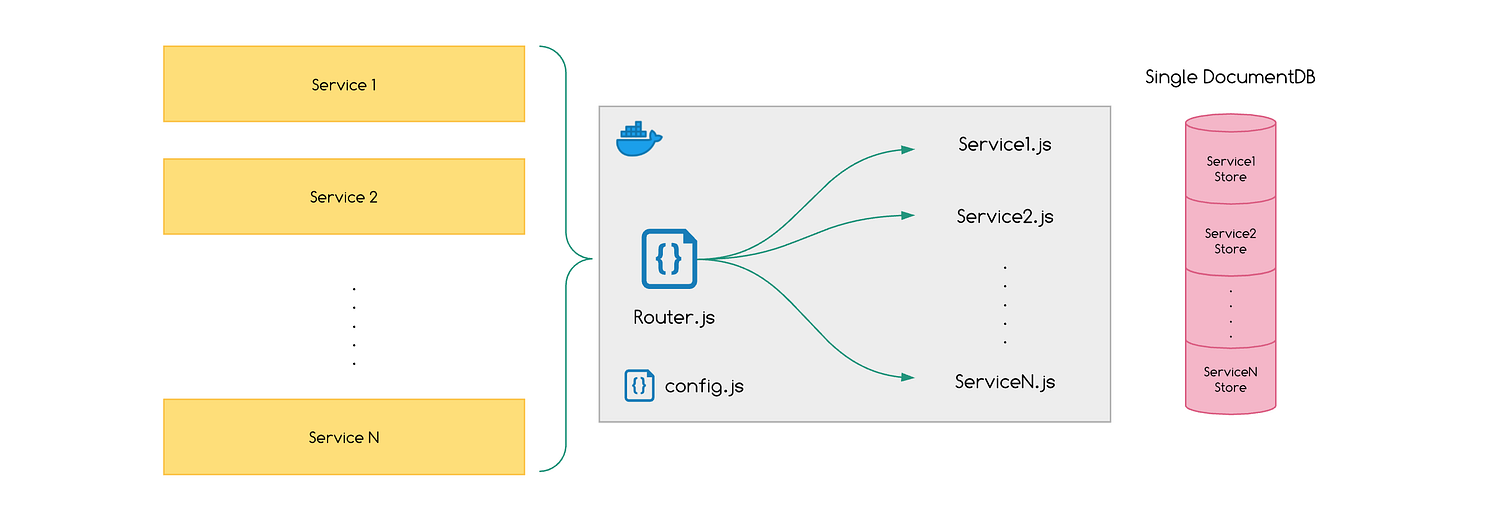

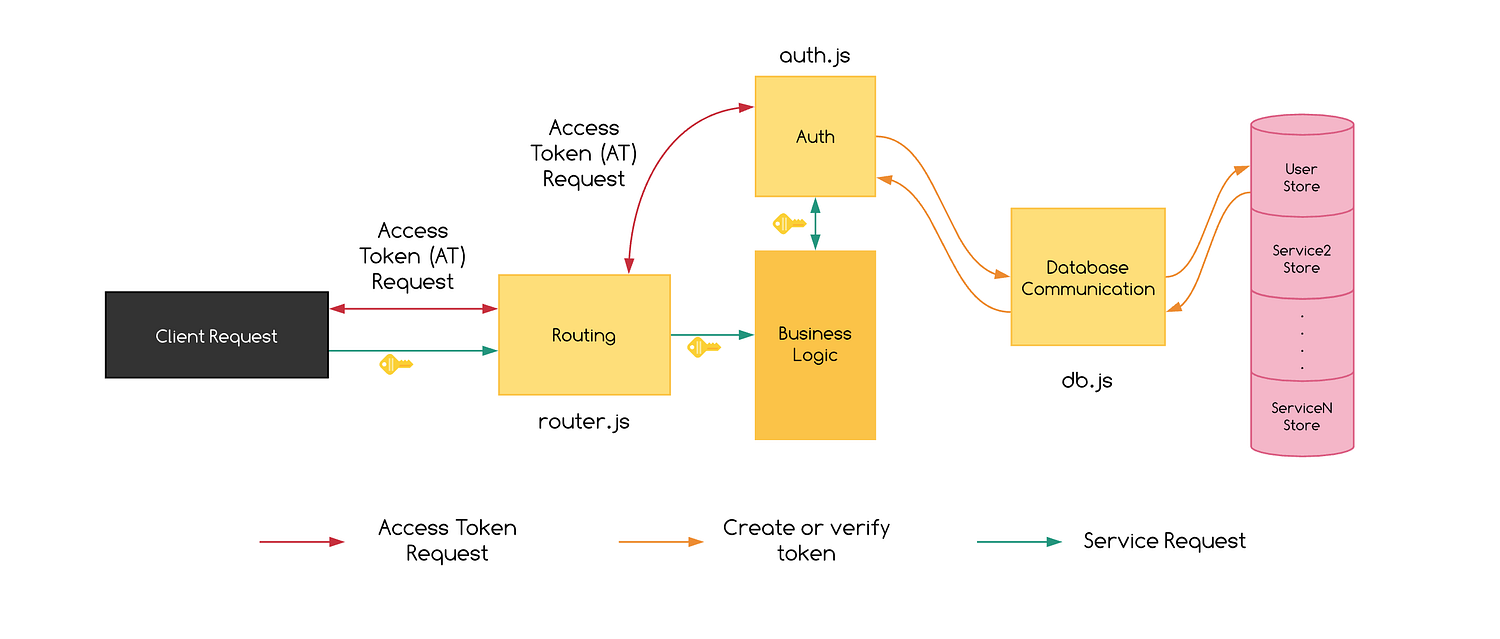

Split the services into their own files and route requests to them through a single router file. The entire codebase sits inside a single Docker image, which is deployed to a single, vertically scaling Kubernetes cluster. Inter-service communication happens through the router, using REST. A single database holds all the documents, scoped by service domain. Native queues are used for asynchronous communication.
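A minimal sketch of Principle 1 in Node.js, assuming plain in-process function calls in place of real HTTP handling. The service names (`catalog`, `cart`), the `"/<service>/<path>"` URL convention and the `scopedStore` helper are hypothetical; a `Map` stands in for the single document database, with every key prefixed by its service domain.

```javascript
// routing.js (sketch): a single router dispatches REST-style requests to service
// modules that live in the same codebase and ship in the same Docker image.
// Service names, routes and scopedStore are hypothetical; calls are in-process.

// One in-memory Map stands in for the single document database. Each service
// gets a store whose keys are prefixed with its own domain.
const db = new Map();
function scopedStore(domain) {
  const key = (collection, id) => `${domain}/${collection}/${id}`;
  return {
    put(collection, id, doc) { db.set(key(collection, id), doc); },
    get(collection, id) { return db.get(key(collection, id)) ?? null; },
  };
}

// coreService1.js and coreService2.js would normally be separate files.
const serviceFactories = {
  catalog: (store) => ({
    "GET /products": ({ id }) => store.get("products", id),
    "POST /products": (doc) => { store.put("products", doc.id, doc); return doc; },
  }),
  cart: (store) => ({
    "POST /items": ({ cartId, productId }) => {
      // Inter-service call goes back through the router, not into the catalog module.
      const product = route("GET", "/catalog/products", { id: productId });
      if (!product) return { error: "unknown product" };
      const cart = store.get("carts", cartId) ?? { items: [] };
      cart.items.push(productId);
      store.put("carts", cartId, cart);
      return cart;
    },
  }),
};

// Build every service once, handing each a store scoped to its domain.
const handlers = Object.fromEntries(
  Object.entries(serviceFactories).map(([name, make]) => [name, make(scopedStore(name))])
);

// "/<service>/<path>" is dispatched to the matching handler of that service.
function route(method, path, body = {}) {
  const [, serviceName, ...rest] = path.split("/");
  const service = handlers[serviceName];
  const handler = service && service[`${method} /${rest.join("/")}`];
  return handler ? handler(body) : { status: 404 };
}
```

Because the cart service reaches the catalog only through `route`, pulling the catalog out into its own deployment later means changing what `route` does for `/catalog/...`, not the cart code.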

Note: While the diagrams show a single file per service, a service may need more than one file to hold its logic, along with a large set of libraries and cross-dependencies.
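The native queues mentioned in Principle 1 carry asynchronous messages between services. Below is a minimal in-process stand-in, assuming a simple publish/subscribe shape; `LocalQueue`, the `order.placed` topic and the explicit `drain()` step are illustrative, and a managed cloud queue would take this role in production.

```javascript
// Minimal in-process stand-in for a "native" queue used for asynchronous
// service-to-service messages. LocalQueue, topic names and drain() are hypothetical.
class LocalQueue {
  constructor() {
    this.messages = [];
    this.subscribers = new Map(); // topic -> array of handlers
  }

  subscribe(topic, handler) {
    if (!this.subscribers.has(topic)) this.subscribers.set(topic, []);
    this.subscribers.get(topic).push(handler);
  }

  publish(topic, payload) {
    // The publisher returns immediately; delivery happens later in drain().
    this.messages.push({ topic, payload });
  }

  // Deliver all pending messages in FIFO order; returns the number of deliveries.
  drain() {
    let delivered = 0;
    while (this.messages.length > 0) {
      const { topic, payload } = this.messages.shift();
      for (const handler of this.subscribers.get(topic) ?? []) {
        handler(payload);
        delivered += 1;
      }
    }
    return delivered;
  }
}

// Example: the order service publishes, the notification service consumes.
const queue = new LocalQueue();
const sentEmails = [];
queue.subscribe("order.placed", (order) => sentEmails.push(`confirm ${order.id}`));
queue.publish("order.placed", { id: "o-42" });
```

`drain()` is called explicitly to keep the example deterministic; in a running service it would be triggered from a timer or worker loop.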

This structure allows for easy maintenance by a smaller team, since changes to a service stay within a single team. Deployment is much easier: a single Docker image is replicated. Telemetry can still be collected from each service separately.
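Since every service lives in the same process, per-service telemetry can come from wrapping each service's handlers with a small recorder keyed by service name. A sketch under that assumption; `withTelemetry` and the metrics shape are hypothetical, not the API of any particular monitoring library:

```javascript
// Sketch: per-service telemetry inside a single deployment. Each handler is wrapped
// so that calls, errors and elapsed time are recorded under its own service name.
// withTelemetry and the metrics shape are hypothetical.
const { performance } = require("perf_hooks");

const metrics = new Map(); // serviceName -> { calls, errors, totalMs }

function withTelemetry(serviceName, handler) {
  return (...args) => {
    const m = metrics.get(serviceName) ?? { calls: 0, errors: 0, totalMs: 0 };
    metrics.set(serviceName, m);
    const start = performance.now();
    m.calls += 1;
    try {
      return handler(...args);
    } catch (err) {
      m.errors += 1; // count the failure, then let the caller see the error
      throw err;
    } finally {
      m.totalMs += performance.now() - start;
    }
  };
}

const getProduct = withTelemetry("catalog", (id) => ({ id }));
const checkout = withTelemetry("payments", (amount) => {
  if (amount <= 0) throw new Error("invalid amount");
  return { charged: amount };
});
```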

Consider failures and spikes: a Kubernetes cluster can scale up and out to handle hardware failures and traffic spikes without impacting overall system reliability.

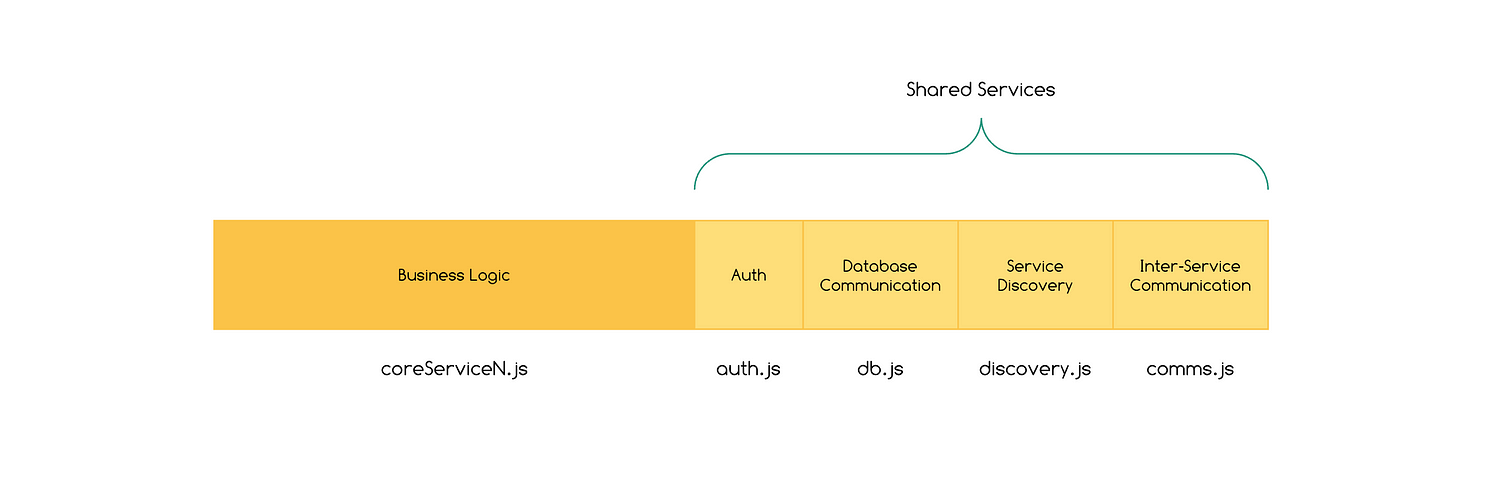

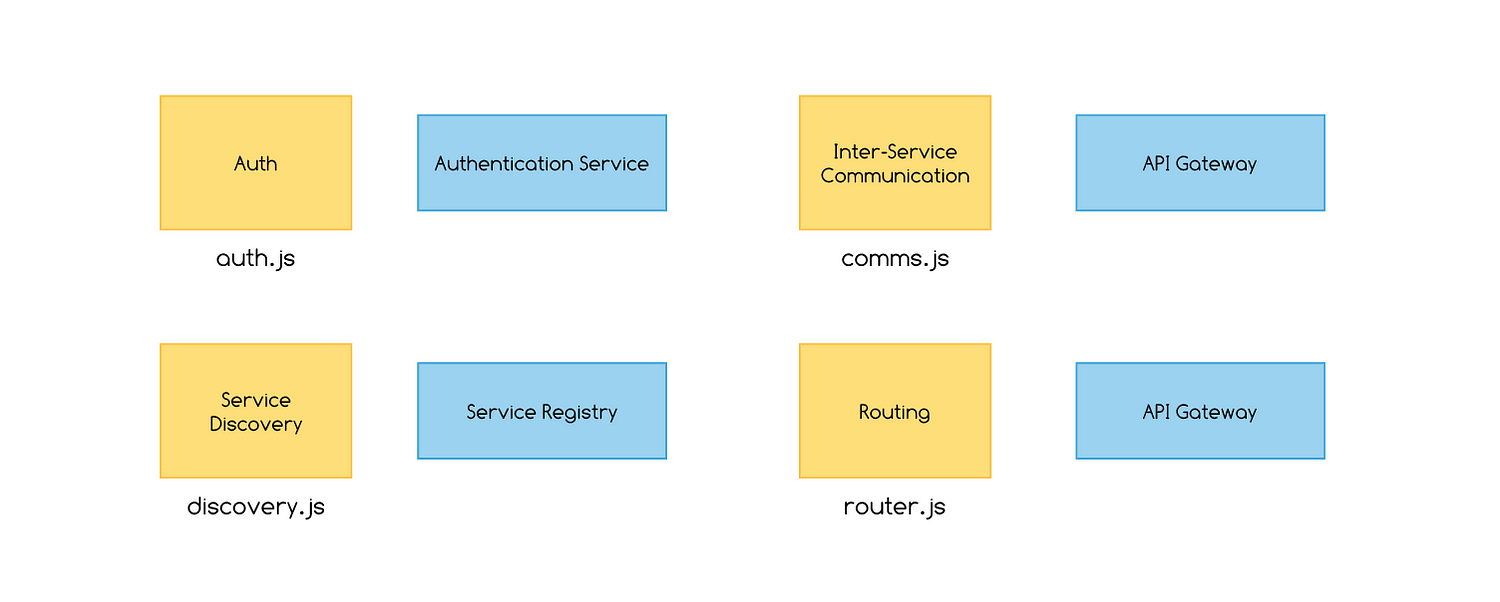

Principle 2: Use swappable modules that emulate MSA components

Within each coreServiceN.js, calls are made to the shared services, which remain common to all the services.

Notice how each of these components stands in as a proxy for an MSA component.

Note: The gateway also verifies the structure of the incoming request and ensures that it matches what the service requires (required fields, etc.).
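A sketch of the gateway's validation step, assuming each route declares its required fields as a plain list of names. The route keys and field names are hypothetical, and a production gateway would more likely use a full schema validator:

```javascript
// Sketch: reject requests that lack the fields the target service requires,
// before the request ever reaches that service. Routes and fields are hypothetical.
const requiredFields = {
  "POST /orders": ["customerId", "items"],
  "POST /payments": ["orderId", "amount"],
};

function validateRequest(routeKey, body) {
  const required = requiredFields[routeKey];
  if (!required) return { ok: false, status: 404, missing: [] }; // unknown route
  // A field counts as missing when the body is absent or the value is undefined/null.
  const missing = required.filter(
    (field) => body == null || body[field] === undefined || body[field] === null
  );
  return missing.length === 0
    ? { ok: true, status: 200, missing }
    : { ok: false, status: 400, missing };
}
```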

Looking at security: in the deflated mode, security is handled on every request. Tokens are issued by the authorization service and verified by the requested service.

Yes, this does allow ingress within the network, but not much is lost, since requests are routed through routing.js and internal redirects are hidden from the user.
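A minimal sketch of the deflated-mode token flow using Node's built-in `crypto` module: the authorization service issues an HMAC-SHA256 signed token, and each service verifies its signature and expiry on every request. The token layout, the claim names and `SECRET` are assumptions for illustration; a real deployment would more likely use JWTs or an identity provider.

```javascript
// Deflated-mode security sketch: issue a signed token, verify it in each service.
// SECRET, the "<payload>.<signature>" layout and the claims are hypothetical.
const crypto = require("crypto");

const SECRET = "replace-with-a-real-secret"; // hypothetical; load from a secret store

function sign(data) {
  return crypto.createHmac("sha256", SECRET).update(data).digest("base64url");
}

// authorizationService.js: issue a token that expires after ttlSeconds.
function issueToken(userId, ttlSeconds = 3600, now = Date.now()) {
  const payload = Buffer.from(
    JSON.stringify({ sub: userId, exp: now + ttlSeconds * 1000 })
  ).toString("base64url");
  return `${payload}.${sign(payload)}`;
}

// Called inside each service before it handles a request; returns claims or null.
function verifyToken(token, now = Date.now()) {
  const parts = String(token).split(".");
  if (parts.length !== 2) return null;
  const [payload, signature] = parts;
  const expected = Buffer.from(sign(payload));
  const given = Buffer.from(signature);
  // Constant-time comparison; timingSafeEqual requires equal lengths.
  if (expected.length !== given.length || !crypto.timingSafeEqual(expected, given)) {
    return null;
  }
  const claims = JSON.parse(Buffer.from(payload, "base64url").toString("utf8"));
  return claims.exp > now ? claims : null;
}
```

`now` is a parameter only so that expiry can be tested deterministically; callers would normally omit it.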

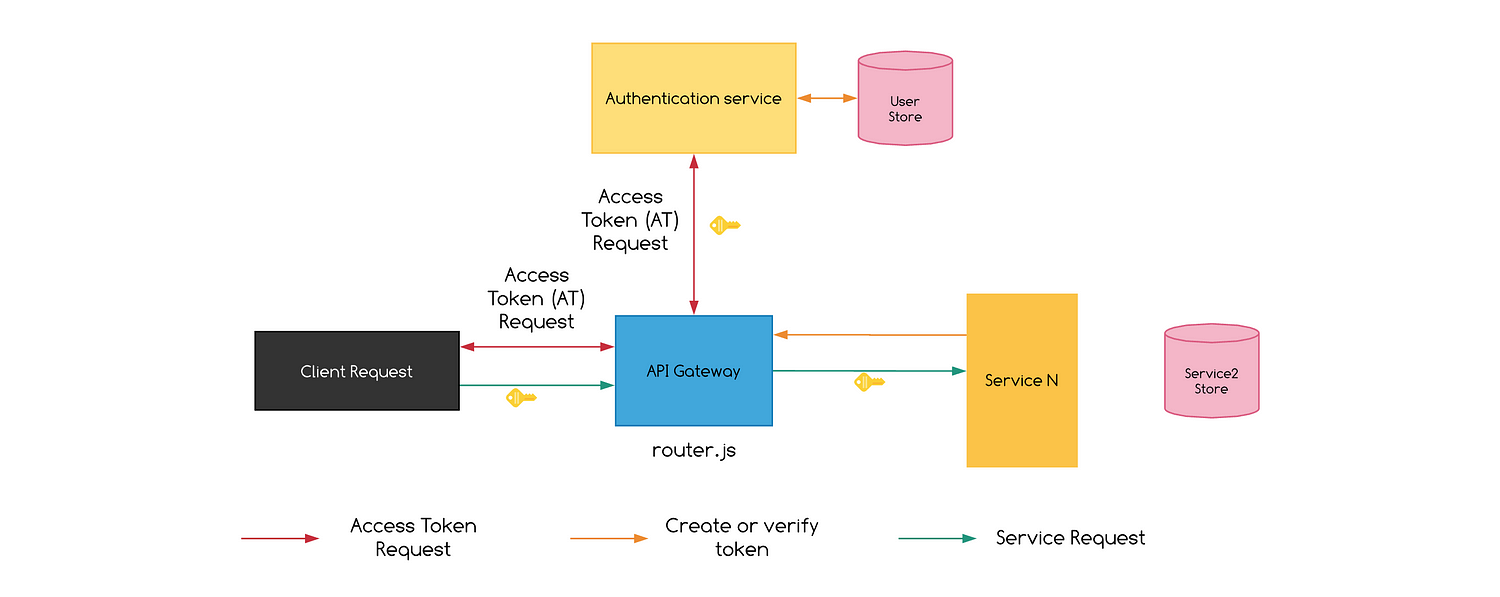

To inflate this architecture, simply move the authorization service out into its own service, put an API gateway in front that issues and verifies tokens, and voilà! MSA!
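One way to keep that move cheap, assuming services depend only on an auth provider exposing `verify(token)`: the deflated provider verifies locally, the inflated one defers to the external API gateway. `createAuthProvider`, the `"deflated"`/`"inflated"` modes and `gatewayClient.check()` are hypothetical names; in many setups the gateway instead verifies tokens before routing and forwards the resulting claims.

```javascript
// Sketch of a swappable auth module. Service code depends only on auth.verify(token).
// Mode names, createAuthProvider and gatewayClient.check() are hypothetical.

function createLocalAuth(verifyToken) {
  // Deflated: verification runs inside this process.
  return { verify: (token) => verifyToken(token) };
}

function createGatewayAuth(gatewayClient) {
  // Inflated: in a real deployment this would be a call to the external API gateway.
  return { verify: (token) => gatewayClient.check(token) };
}

function createAuthProvider(mode, deps) {
  return mode === "inflated"
    ? createGatewayAuth(deps.gatewayClient)
    : createLocalAuth(deps.verifyToken);
}

// Service code is identical in both modes.
function createOrderService(auth) {
  return {
    listOrders(token) {
      const claims = auth.verify(token);
      if (!claims) return { status: 401 };
      return { status: 200, user: claims.sub, orders: [] };
    },
  };
}
```

The mode could come from configuration, for example `createAuthProvider(process.env.AUTH_MODE ?? "deflated", deps)` with a hypothetical `AUTH_MODE` variable, so the same image runs in either mode.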

Inflating the deflated MSA

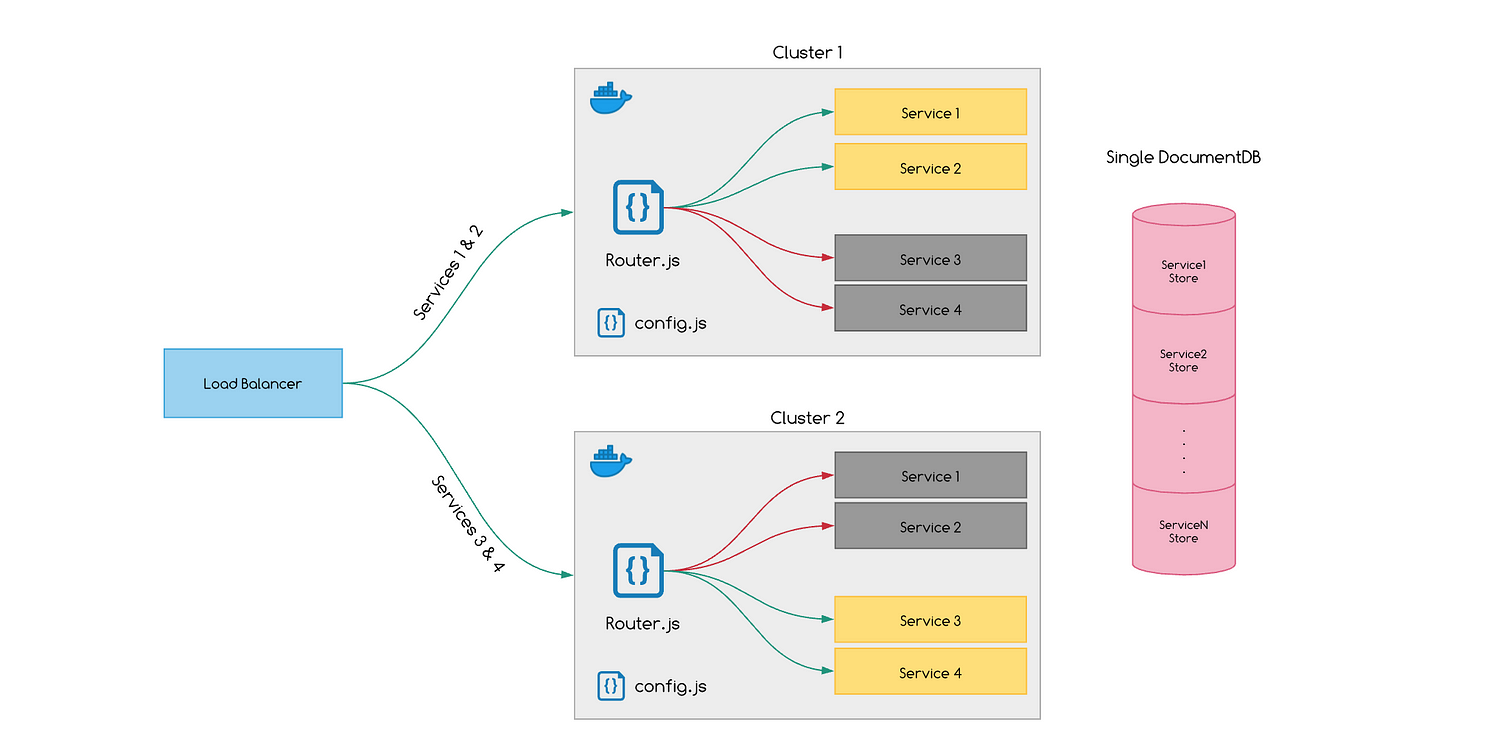

As long as you stick to the principles listed above, inflating this architecture to handle a larger load is simple: use dirty scaling.

Dirty scaling is called for when the product is going through a planned but one-off burst of requests. Typically, when you’re scaling for a 10x to 20x burst of requests over a few days, you need not add more resources to the servers. Instead, use a load balancer and replicate the code across multiple clusters.

Again, since the document storage is highly scalable and self-managed (like Cosmos DB), we need not worry about its reliability. The load balancer lets you cluster the services and pass the load to either cluster without impacting any service. It helps to group services that depend on each other within each cluster to enable reliable dirty scaling.
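A sketch of dirty scaling with clusters simulated in-process: each cluster carries the full, grouped set of services, and a round-robin load balancer skips clusters marked unhealthy. `createCluster`, `createLoadBalancer` and the health flag are hypothetical; in practice this role is played by a managed load balancer in front of replicated deployments.

```javascript
// Dirty-scaling sketch: the same code is replicated as several "clusters" and a
// round-robin load balancer spreads requests across the healthy ones.
// createCluster, createLoadBalancer and the health flag are hypothetical.

function createCluster(name) {
  let healthy = true;
  let handled = 0;
  return {
    name,
    isHealthy: () => healthy,
    setHealthy: (value) => { healthy = value; },
    get handled() { return handled; },
    // Every cluster carries the full group of services, so any cluster can serve any request.
    handle(request) {
      handled += 1;
      return { servedBy: name, path: request.path };
    },
  };
}

function createLoadBalancer(clusters) {
  let next = 0;
  return {
    route(request) {
      // Try each cluster at most once, starting from the next one in rotation.
      for (let i = 0; i < clusters.length; i += 1) {
        const cluster = clusters[(next + i) % clusters.length];
        if (cluster.isHealthy()) {
          next = (next + i + 1) % clusters.length;
          return cluster.handle(request);
        }
      }
      throw new Error("no healthy cluster available");
    },
  };
}

const clusters = [createCluster("cluster-a"), createCluster("cluster-b")];
const lb = createLoadBalancer(clusters);
```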

Once the cost-benefit case is made and budgets are assigned, you can look at dedicating teams to individual code blocks and maintaining each separately.

For hardcore architects and developers, this would be blasphemy. However, I urge you to consider the beauty of this approach — it finds a balance between the hard-hitting large scale principles of MSA and the organizational needs. In fact, you can use this to justify further budgets and resources once you hit a bottleneck. For the majority of the retailers and service providers, this should work like a charm!

I think the fascination technology teams have with Kafka, MapReduce and Hadoop is justified. They are, after all, the Bugattis of their profession. Nonetheless, we have to realize that everything we do is in the service of the customer and the business: whatever gives the customer the best possible experience at minimum cost should be our goal. And that is quite achievable. As of late 2016, Stack Exchange served 200 million requests per day, backed by just four SQL servers: a primary for Stack Overflow, a primary for everything else, and two replicas. They could have gone MSA and added a million different gateways, document stores and queues, but found that four SQL servers provide a wonderful experience to all their users.
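For scale, averaging that Stack Exchange figure over a day gives the rate below. That is before accounting for peaks, and it is already well above the typical retail loads quoted earlier:

```latex
\frac{200\,000\,000\ \text{requests/day}}{86\,400\ \text{s/day}} \approx 2\,315\ \text{requests/s}
```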